Large language models (LLMs) like GPT-4 have never smelled a rain-soaked campsite — yet ask one to describe it, and it might spin a vivid scene: “The air is thick with anticipation, laced with a scent that is both fresh and earthy.” How does a machine with no nose, no sensory input, and no first-hand experience of the world generate language that feels grounded in reality?

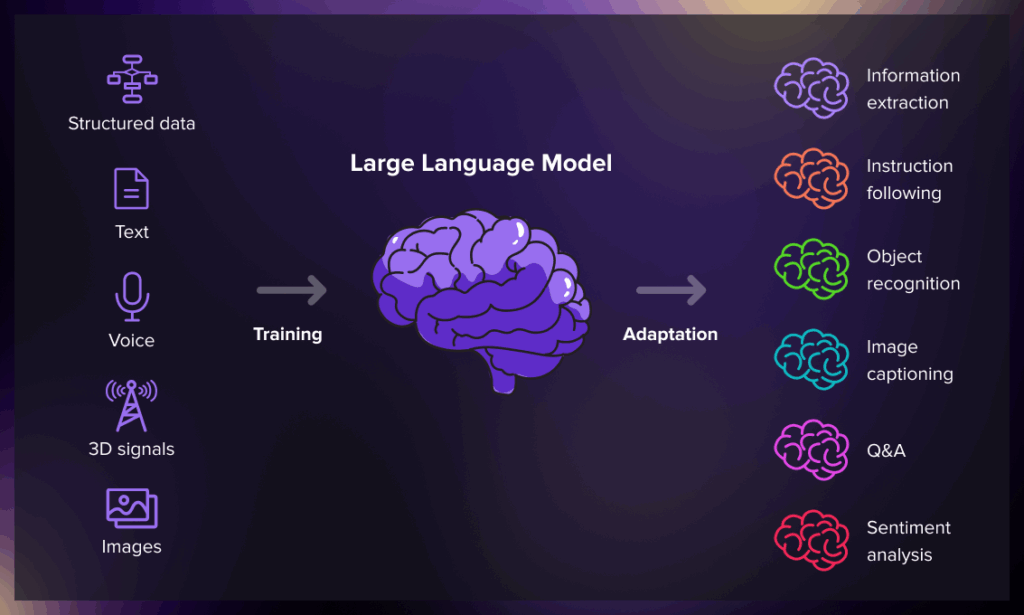

The default explanation is mimicry. These models are trained on vast quantities of text and are thought to simply remix patterns found in their training data. But new research from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) suggests something more may be going on — that LLMs might develop internal models of reality to improve their linguistic performance.

From Babbling to Understanding

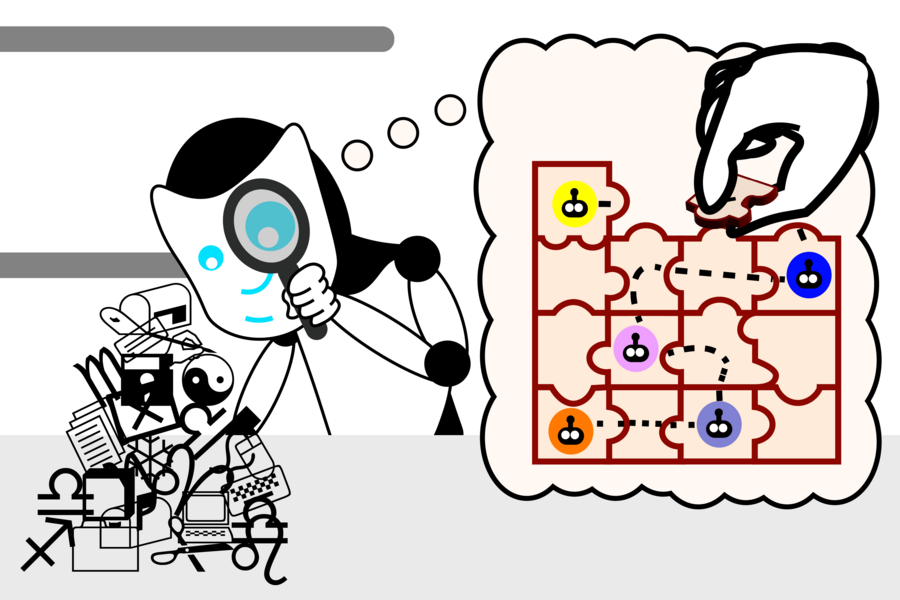

In a controlled experiment, CSAIL researchers trained a language model on solutions to a set of virtual robot navigation puzzles called Karel. The model was given correct instructions but was never shown how those instructions worked in the simulated environment.

To probe what was happening inside the model, the team used a machine learning technique aptly called probing — a way to peek into the internal representations of the LLM and determine whether it was forming meaningful concepts.

What they found was striking: despite never being trained on the actual simulation mechanics, the LLM developed an internal representation of the environment — an implicit simulation of the robot’s behavior. Over time, its performance improved dramatically. By the end of training, it was solving puzzles correctly 92.4% of the time.

“At the start, the model generated random instructions that didn’t work,” said Charles Jin, lead author of the study and a PhD student at MIT EECS. “But eventually, it was producing valid solutions almost every time. That gave us a reason to believe it wasn’t just generating text — it was understanding the meanings embedded in that text.”

Simulations Within Simulations

As the model’s performance improved, the internal probe revealed a shift: it began to encode the effects of each instruction on the robot’s position. That is, without ever seeing the robot move, the LLM seemed to intuit how its commands would play out in the simulated world — suggesting a kind of emergent understanding.

Jin likens the model’s progress to a child learning language. “It starts with babbling, then acquires syntax, and finally, meaning. That’s what we observed.”

But how could they be sure that it was the model — and not the probe — doing the real work?

A “Bizarro World” Sanity Check

To disentangle the model’s reasoning from the probe’s inference, the team devised a clever twist. They trained a second probe in a “Bizarro World,” where the meanings of instructions were flipped — for instance, “up” meant “down.”

If the probe were merely translating instructions into robot movements, it would succeed just as well in this reversed world. But it didn’t. The new probe failed to interpret the model’s latent representations correctly — suggesting the semantics were embedded within the model itself, not reconstructed by the probe.

This, the researchers argue, supports the idea that the LLM had developed its own internal simulation of the environment — a reality-modeling ability that emerged spontaneously through language learning.

A Glimpse Beyond Syntax

“This research targets a central question in AI,” said Martin Rinard, MIT EECS professor and senior author of the paper. “Are LLM capabilities just statistical tricks, or are they building something deeper? Our results suggest the latter — that models develop internal structures to reason about tasks they’ve never explicitly seen.”

Still, the authors are cautious. The programming language used in the study was simple, and the model relatively small. Follow-up work will explore whether the same effects emerge in larger models or more complex settings.

An open question remains: does the model use its internal simulation to reason about reality, or is the simulation merely a byproduct of language training? “While our results are consistent with the former,” Rinard says, “they don’t yet prove it.”

A Philosophical Frontier

Outside experts see the work as a valuable step in a bigger debate.

“There’s ongoing discussion about whether LLMs truly ‘understand’ language or just approximate meaning through surface-level pattern matching,” said Ellie Pavlick, a computer science and linguistics professor at Brown University. “This paper addresses that question in a controlled setting. The design is elegant, and the findings are optimistic — suggesting that maybe LLMs really can learn something deeper about what language means.”

While language models still can’t smell the rain, they may be forming mental models of the world that help them write about it — and perhaps, one day, understand it.