LG AI Research has introduced EXAONE Deep, a new large language model designed to excel in mathematics, science, and coding. Despite its relatively compact architecture, EXAONE Deep is showing strong signs of being a global contender in the rapidly evolving field of reasoning-centric AI.

Challenging the Global Elite in Reasoning AI

Only a select few institutions worldwide have made significant strides in developing reasoning-capable foundation models. LG aims to join their ranks with EXAONE Deep, positioning it as a direct competitor to leading global models. The Korean tech giant has focused this latest effort on dramatically improving logical reasoning and cross-domain understanding, a leap that has already been recognized internationally.

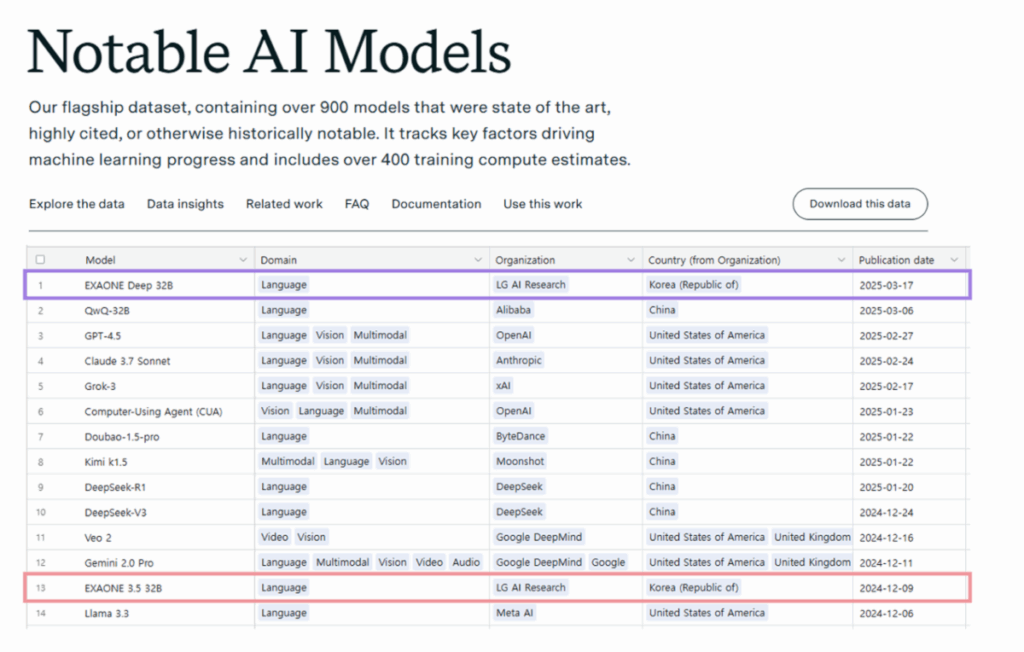

Shortly after launch, EXAONE Deep was named to Epoch AI’s “Notable AI Models” list, a recognition previously given to its predecessor, EXAONE 3.5. LG is now the only Korean organization with models featured on this prestigious list for two consecutive years.

Small Models, Big Results

Despite the model’s compactness — the top-tier version has 32 billion parameters — EXAONE Deep is delivering standout results.

- Mathematics:

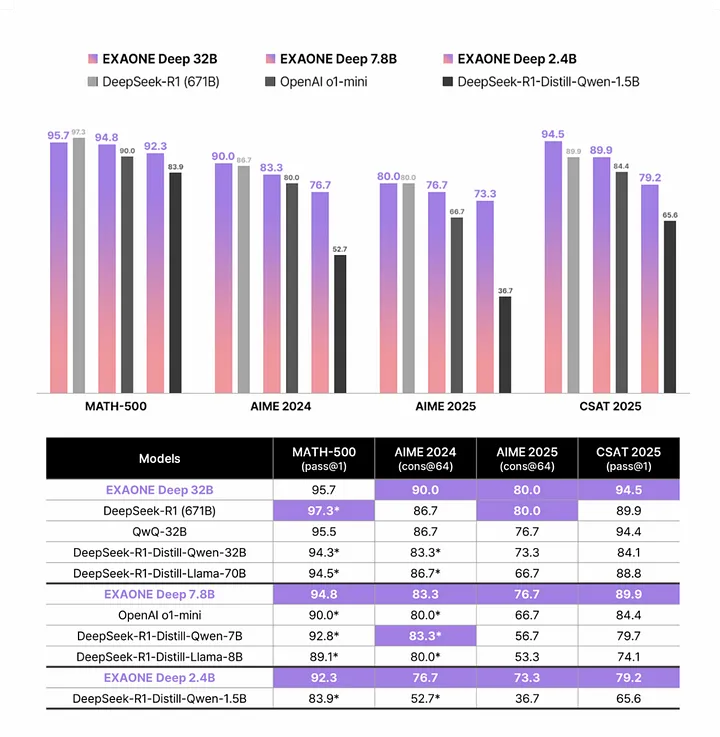

The 32B model outperformed a much larger competitor on a challenging math benchmark, while the 7.8B and 2.4B versions placed first on major math benchmarks among models of their respective sizes.

Notably, on the 2025 AIME (American Invitational Mathematics Examination), the 32B model matched the performance of the far larger DeepSeek-R1 (671B parameters), a testament to EXAONE Deep’s efficient learning and reasoning power.

- Scores by model size:

  - 32B: 94.5 (general math), 90.0 (AIME 2024), matched DeepSeek-R1 on AIME 2025

  - 7.8B: 94.8 (MATH-500), 59.6 (AIME 2025)

  - 2.4B: 92.3 (MATH-500), 47.9 (AIME 2025)

Science and Coding Strength

The model also shines in scientific reasoning and software development:

- GPQA Diamond (doctoral-level science reasoning):

  - 32B: 66.1

  - 7.8B & 2.4B: top-ranked in their categories

- LiveCodeBench (real-time coding proficiency):

  - 32B: 59.5

  - 7.8B & 2.4B: both secured first in their size classes

These achievements build on the success of EXAONE 3.5 2.4B, which previously topped Hugging Face’s LLM Leaderboard in the on-device edge model division.

Stronger General Knowledge, Too

EXAONE Deep is not just a domain-specific expert. It also shows impressive general intelligence, scoring 83.0 on the MMLU (Massive Multitask Language Understanding) benchmark — the best performance by a Korean model to date. This suggests that the improvements to reasoning capabilities also enhance its ability to handle a broader range of topics.

Toward More Capable and Accessible AI

LG AI Research sees EXAONE Deep as a milestone in building AI systems that can reason, solve problems, and generate knowledge with efficiency — all while keeping model size manageable. The goal is to create models that are not only powerful but also accessible for practical applications, including education, research, and edge deployment.

“EXAONE Deep represents our ongoing commitment to making AI smarter and more useful,” said LG AI Research, adding that continued innovation in this space could significantly enrich human lives in the years to come.